General

Welcome to the home page of the Introduction to Data Science Course. The course consists of short chapters that we will cover in each lecture. Before each lesson, please review the reading material and the videos. We will discuss the reading material in detail during the lesson. The lesson will finish with homework, where you will complete the quiz. To complete the quiz, you will most often need to do a small project, where you will analyze a dataset and answer the quiz's questions with the results of your analysis.

This course is offered in the xAIM's master's program. The course is co-financed by the European Commission instrument "Connecting Europe Facility (CEF) Telecom" through the project "eXplainable Artificial Intelligence in healthcare Management project" (2020-EU-IA-0098), grant agreement number INEA/CEF/ICT/A2020/ 2276680.

Copyright

This material is offered under a Creative Commons CC BY-NC-ND 4.0 license.

Software

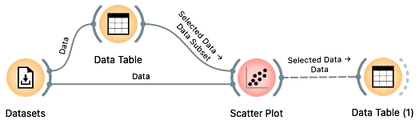

- Orange Data Mining, visit this webpage to install Orange and check out its documentation

Additional Material and Communication Channels

- Please join an IDS communication server on Discord for course-related announcements, discussions and Q&A.

- Lectures start on Friday, March 3rd, at 18.00 CET (sharp!) on Zoom.

Dates and Online Sessions

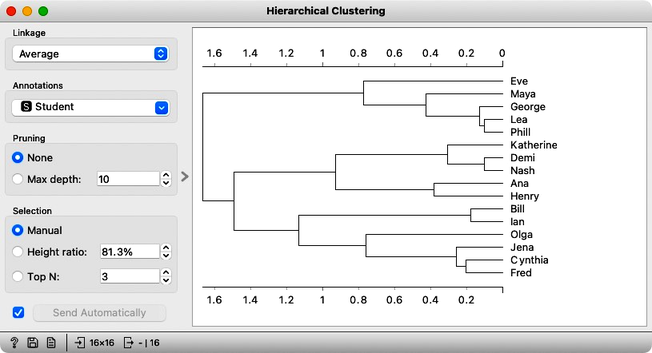

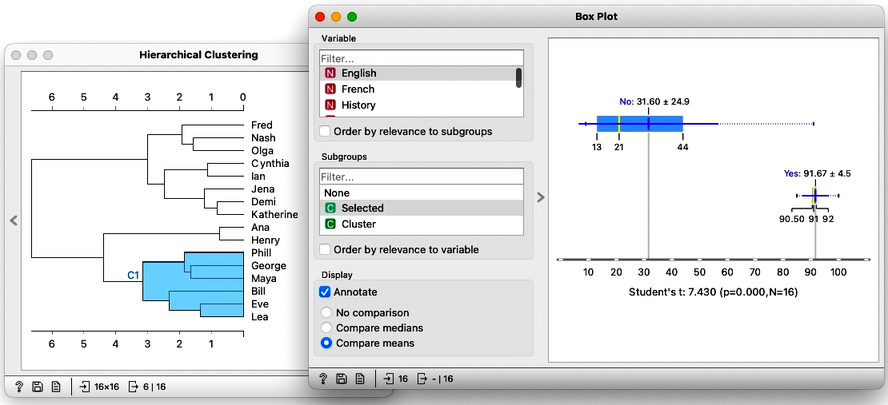

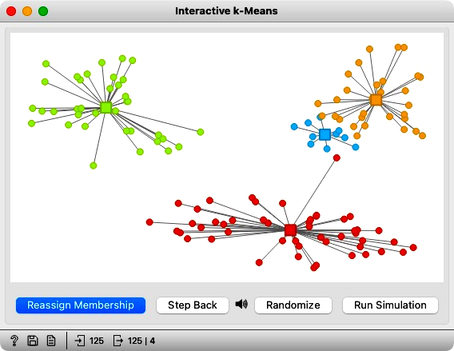

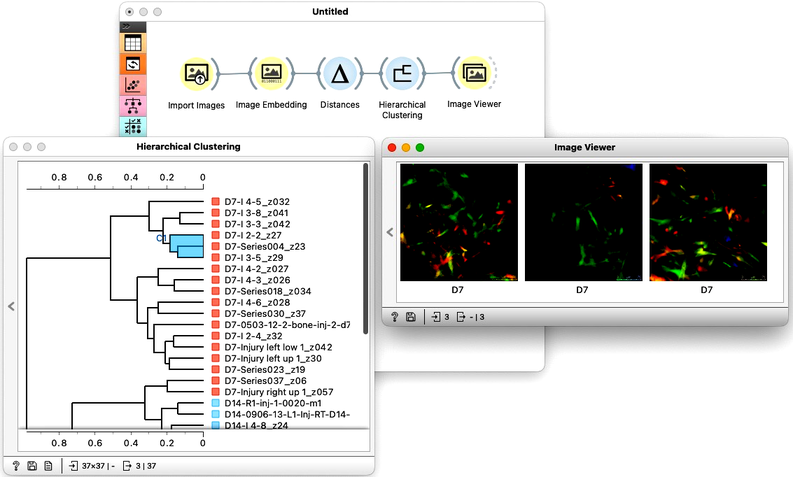

- Friday, March 3 (exploratory data analysis, clustering, cluster explanation)

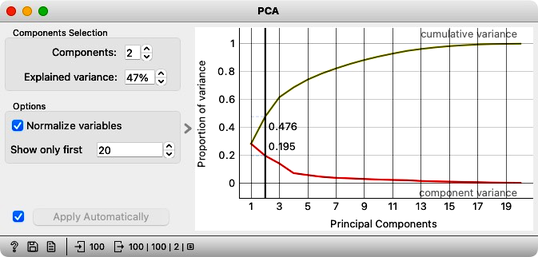

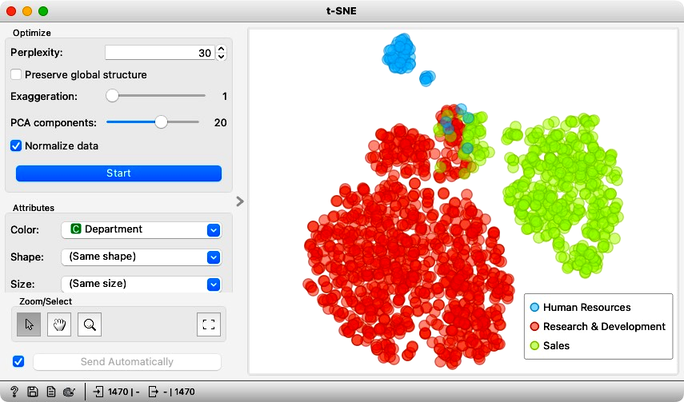

- Thursday, March 16 (dimensionality reduction, PCA and understanding of components, embedding and explanation of clusters)

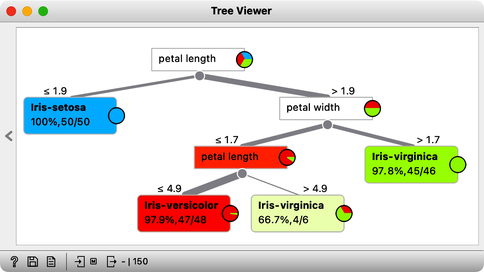

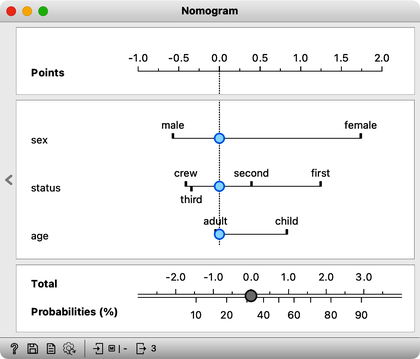

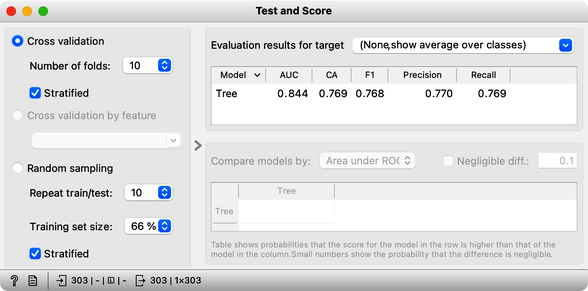

- Thursday, March 30 (classification, explanation with nomograms and other tricks)

Thursday, April 13 (regression, explanation with SHAP values)

Wednesday, June 7 (exam)

All online sessions will be on Zoom and will start at 18:00 CET. We will finish before 20:30.

Grading

- 70% Four homework assignments, one after online session

- 30% Exam, about 20 choice questions, lasting 1.5 hour